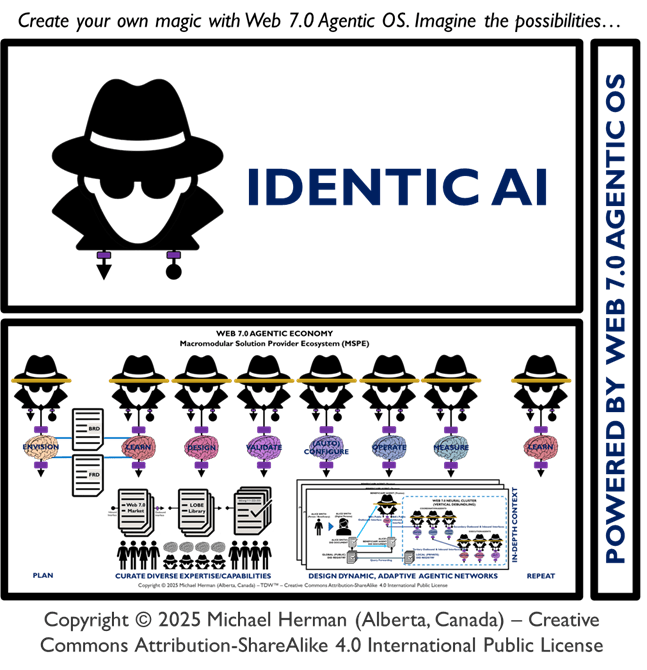

Create your own magic with Web 7.0™ / TDW AgenticOS™. Imagine the possibilities.

Copyright © 2025 Michael Herman (Bindloss, Alberta, Canada) – Creative Commons Attribution-ShareAlike 4.0 International Public License

Web 7.0™, TDW AgenticOS™ and Hyperonomy™ are trademarks of the Web 7.0 Foundation. All Rights Reserved.

Introduction

This article describes Web 7.0™ and TDW AgenticOS™ – with a specific focus on the Web 7.0 Neuromorphic Agent Architecture Reference Model (NAARM) used by TDW AgenticOS™ to support the creation of Web 7.0 Decentralized Societies.

The intended audience for this document is a broad range of professionals interested in furthering their understanding of TDW AgenticOS for use in software apps, agents, and services. This includes software architects, application developers, and user experience (UX) specialists, as well as people involved in a broad range of standards efforts related to decentralized identity, verifiable credentials, and secure storage.

The Second Reformation

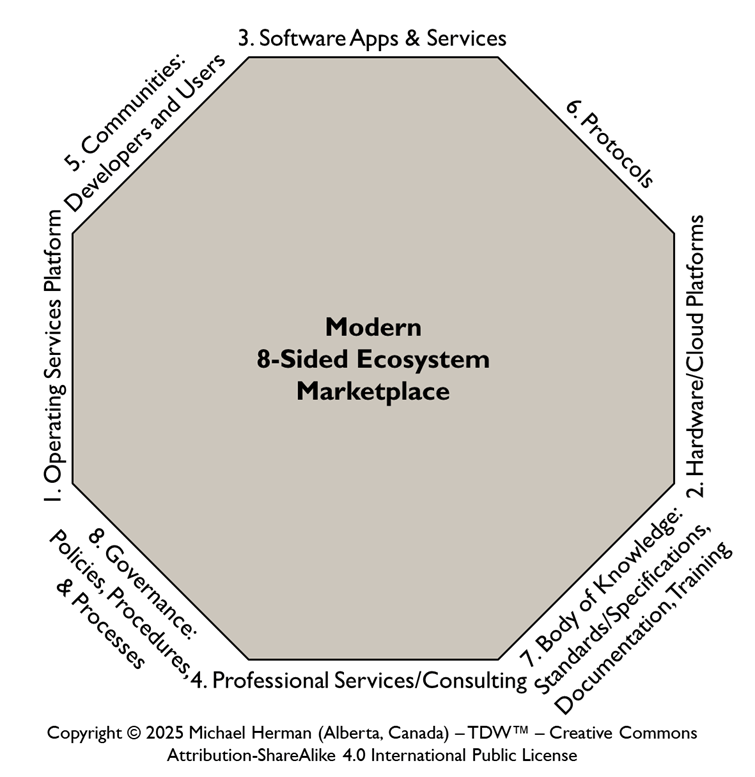

Web 7.0 Foundation Ecosystem

“Web 7.0 is a unified software and hardware ecosystem for building resilient, trusted, decentralized systems using decentralized identifiers, DIDComm agents, and verifiable credentials.”

Michael Herman, Trusted Digital Web (TDW) Project, Hyperonomy Digital Identity Lab, Web 7.0 Foundation. January 2023.

TDW AgenticOS™

TDW AgenticOS™ is a macromodular, neuromorphic agent platform for coordinating and executing complex systems of work that is:

- Secure

- Trusted

- Open

- Resilient

TDW AgenticOS™ is 100% Albertan by birth and open source.

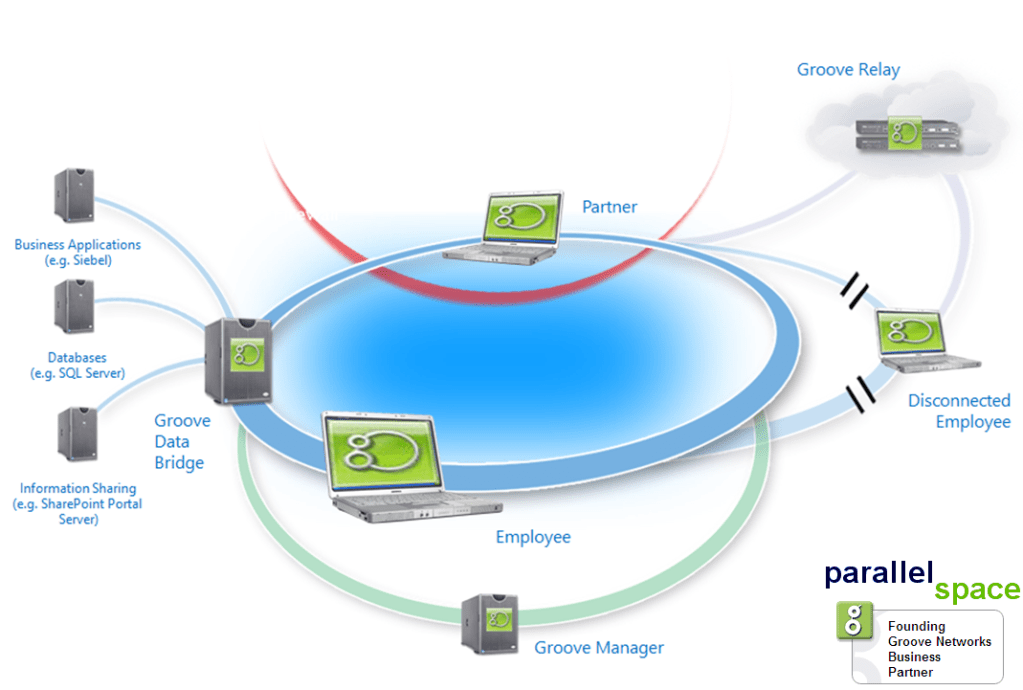

Project “Shorthorn”

Project “Shorthorn” is a parody project name based on Microsoft’s Windows “Longhorn” WinFS project (a SQL-based Windows File System project), in which the author was involved from design preview and feedback, consulting, and PM technical training (Groove Workspace system architecture and operation) perspectives (circa 2001-2002).

What makes Shorthorns great:

– They’re good at turning grass into meat (great efficiency).

– Shorthorn cows are amazing mothers and raise strong, healthy calves (nurture great offspring).

– Their genetics blend well with other breeds for strong hybrid calves (plays well with others).

…and so it is with TDW AgenticOS™.

Web 7.0 Foundation

The Web 7.0 Foundation, a federally-incorporated Canadian non-profit corporation, is chartered to develop, support, promote, protect, and curate the Web 7.0 ecosystem: TDW AgenticOS operating system software, and related standards and specifications. The Foundation is based in Alberta, Canada.

What we’re building at the Web 7.0 Foundation is described in this quote from Don Tapscott and co.:

“We see an alternate path: a decentralized platform for our digital selves, free from total corporate control and within our reach, thanks to co-emerging technologies.”

“A discussion has begun about “democratizing AI.” Accessibility is critical. Mostaque has argued that the world needs what he calls “Universal Basic AI.” Some in the technology industry have argued that AI can be democratized through open source software that is available for anyone to use, modify, and distribute. Mostaque argues that this is not enough. “AI also needs to be transparent,” meaning that AI systems should be auditable and explainable, allowing researchers to examine their decision-making processes. “AI should not be a single capability on monolithic servers but a modular structure that people can build on,” said Mostaque. “That can’t go down or be corrupted or manipulated by powerful forces. AI needs to be decentralized in both technology, ownership and governance.” He’s right.”

You to the Power Two. Don Tapscott and co. 2025.

A Word about the Past

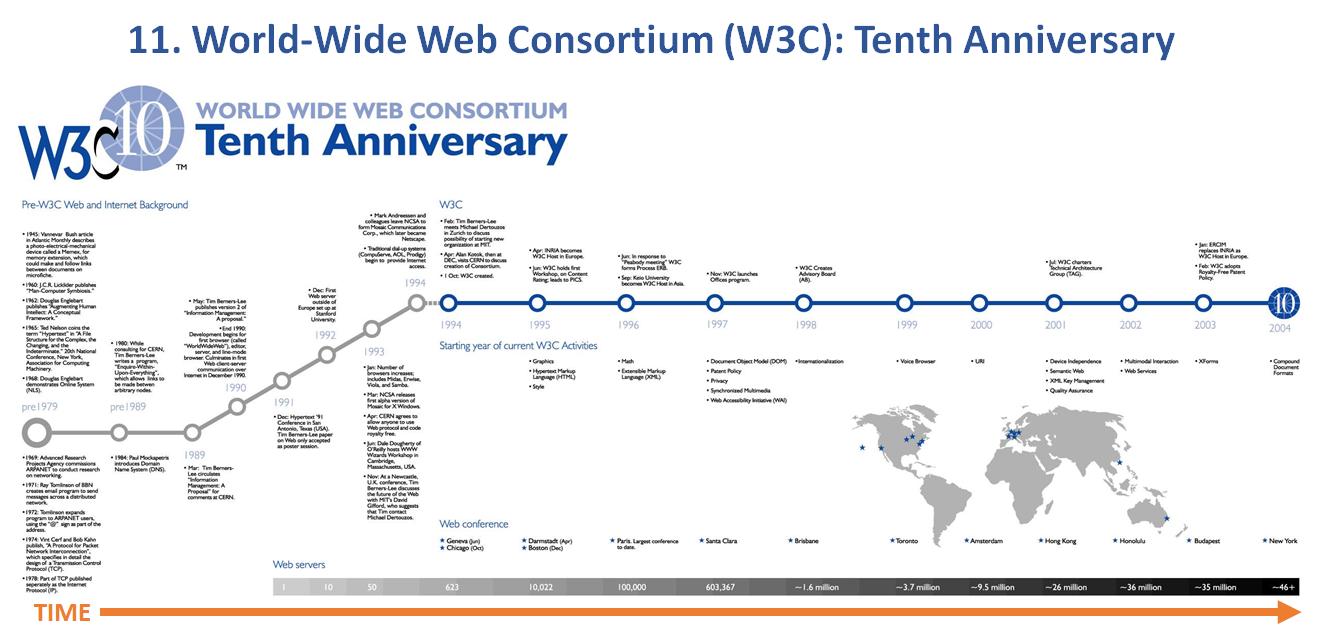

The Web 7.0 project has roots dating back approximately 30 years to before 1998 with the release of Alias Upfront for Windows. Subsequent to the release of Upfront (which Bill Gates designated as the “most outstanding graphics product for Microsoft Windows 3.0”), the AUSOM Application Design Framework was formalized.

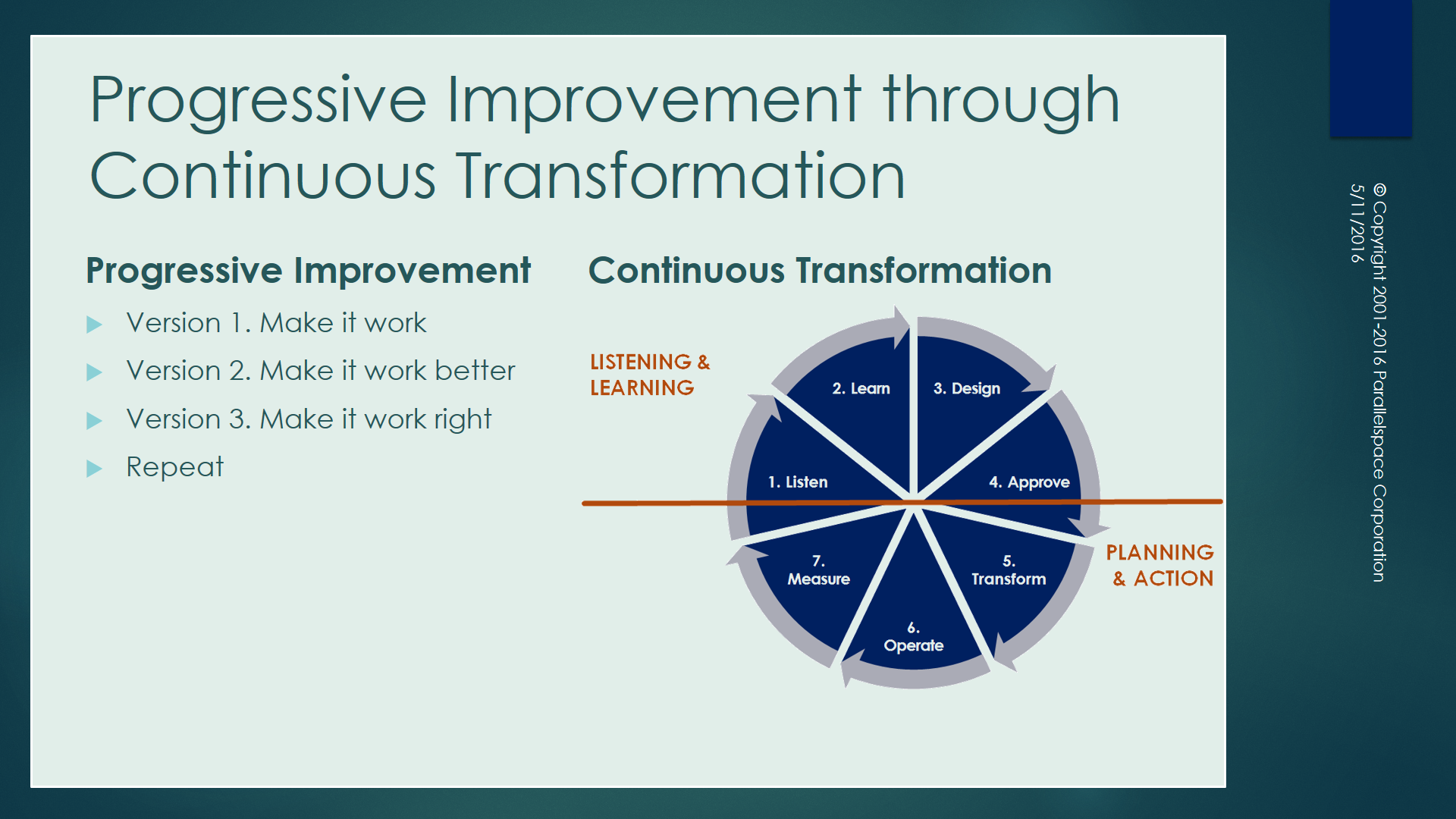

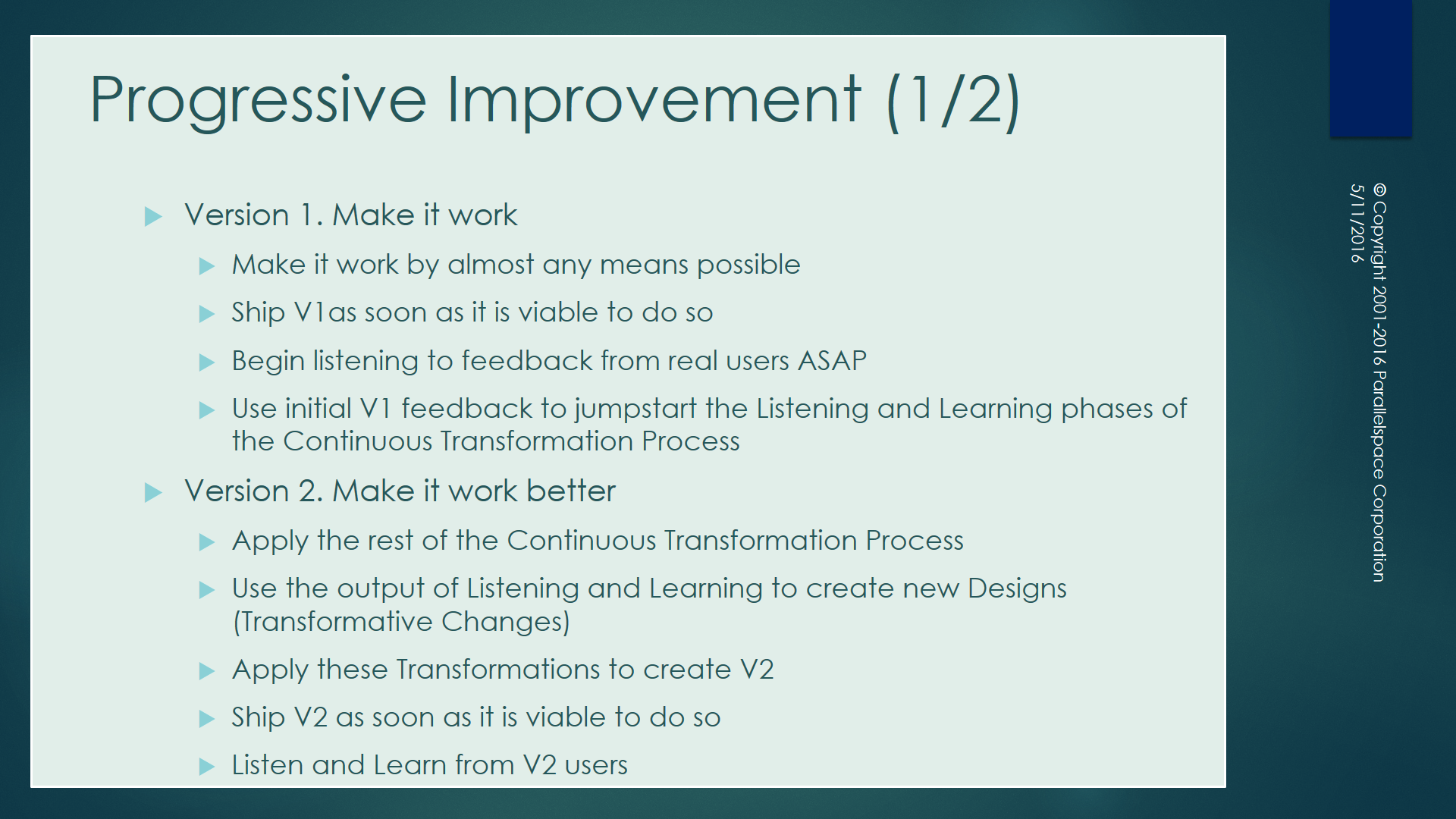

AUSOM Application Design Framework

AUSOM is an acronym for A User State of Mind — the name of a framework or architecture for designing software applications that are easier to design, implement, test, document and support. In addition, an application developed using the AUSOM framework is more capable of being: incrementally enhanced, progressively installed and updated, dynamically configured and is capable of being implemented in many execution environments. This paper describes the Core Framework, the status of its current runtime implementations and its additional features and benefits.

What is AUSOM?

The AUSOM Application Design Framework, developed in 1998, is a new way to design client-side applications. The original implementation of the framework is based on a few basic concepts: user scenarios and detailed task analysis, visual design using state-transition diagrams, and implementation using traditional Windows message handlers.

The original motivation for the framework grew out of the need to implement a highly modeless user interface that was comprised of commands or tasks that were very modal (e.g. allowing the user to change how a polygon was being viewed while the user was still sketching the boundary of the polygon).

To learn more, read The AUSOM Application Design Framework whitepaper.

Einstein’s Advice

The following is essentially the same advice I received from Charles Simonyi when we were both at Microsoft (and one of the reasons why I eventually left the company in 2001).

“No problem can be solved from the same level of consciousness that created it.” [Albert Einstein]

“The meaning of this quote lies in Einstein’s belief that problems are not just technical failures but outcomes of deeper ways of thinking. He suggested that when people approach challenges using the same assumptions, values, and mental habits that led to those challenges, real solutions remain out of reach. According to this idea, improvement begins only when individuals are willing to step beyond familiar thought patterns and question the mindset that shaped the problem.” [Economic Times]

Simonyi et al., in the paper Intentional Software, state:

For the creation of any software, two kinds of contributions need to be combined even though they are not at all similar: those of the domain providing the problem statement and those of software engineering providing the implementation. They need to be woven together to form the program.

TDW AgenticOS is the software for building decentralized societies.

A Word about the Future

“Before the next century is over, human beings will no longer be the most intelligent or capable type of entity on the planet. Actually, let me take that back. The truth of that last statement depends on how we define human.” Ray Kurzweil. 1999.

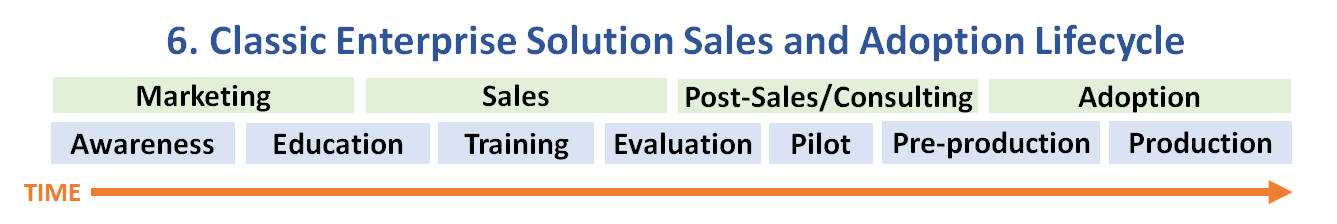

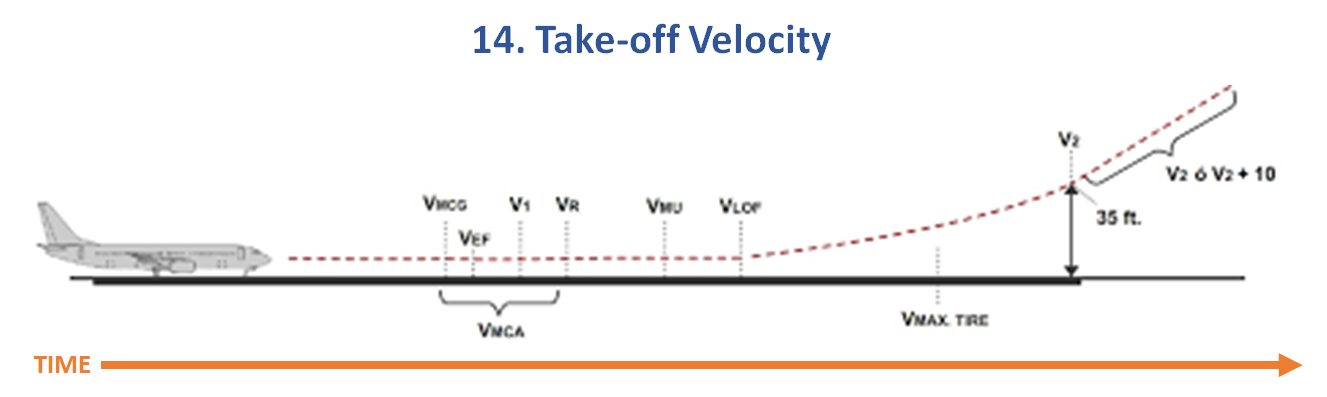

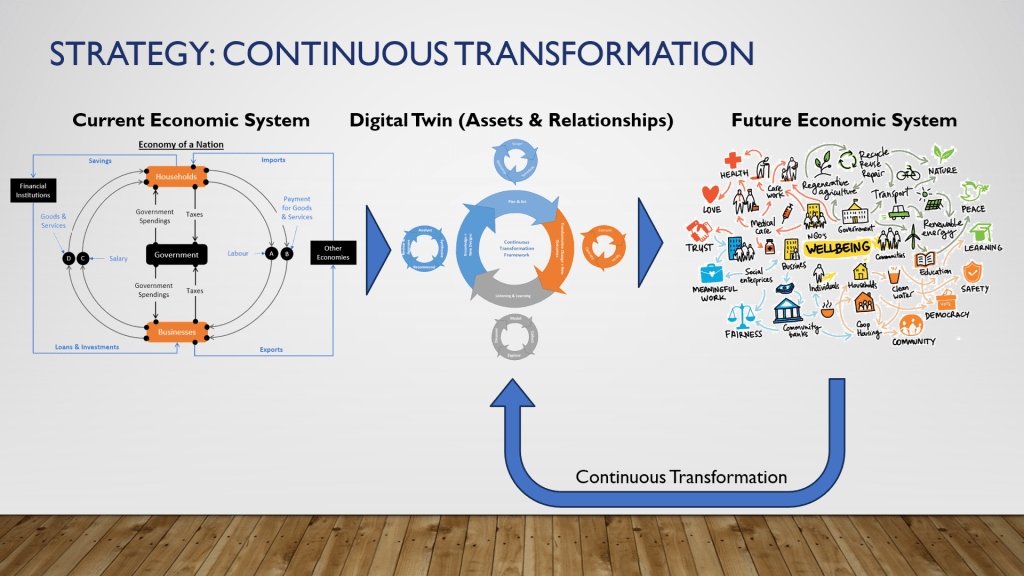

NOTE: “Artificial Intelligence” (or “AI”) does not appear anywhere in the remainder of this article. The north star of the Web 7.0 project is to be a unified software and hardware ecosystem for building resilient, trusted, decentralized systems using decentralized identifiers, DIDComm agents, and verifiable credentials – regardless of whether the outcome (a Web 7.0 network) uses AI or not. Refer to Figures 4a, 4b, and 6 for a better understanding.

DIDComm Notation, a visual language for architecting and designing decentralized systems, was used to create the figures in this article.

Value Proposition

By Persona

Business Analyst – Ability to design and execute secure, trusted business processes of arbitrary complexity across multiple parties in multiple organizations – anywhere on the planet.

Global Hyperscaler Administrators – Ability to design and execute secure, trusted systems administration processes (executed using PowerShell) of arbitrary complexity across an unlimited number of physical or virtual servers hosted by an unlimited number of datacenters, deployed by multiple cloud (or in-house) xAAS providers – anywhere on the planet.

App Developers – Ability to design, build, deploy, and manage secure, trusted network-effect-by-default apps of arbitrary complexity across multiple devices owned by anybody – anywhere on the planet.

Smartphone Vendors – Ability to upsell a new category of second device: a Web 7.0 Always-on Trusted Digital Assistant, a pre-integrated hardware and software solution that pairs with the smart device a person already owns. Instead of purchasing or leasing a single smartphone, a person can now leverage a Web 7.0-enabled smartphone bundle that also includes a secure, trusted, and decentralized communications link to a Web 7.0 Always-on Trusted Digital Assistant deployed at home (or in a cloud of their choosing).

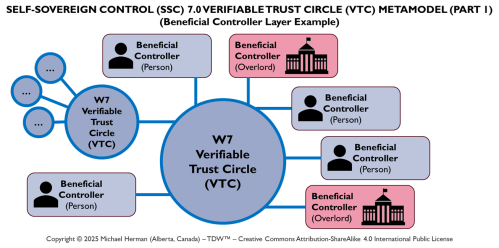

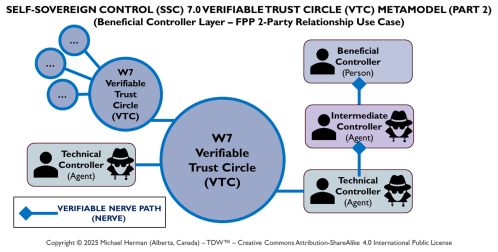

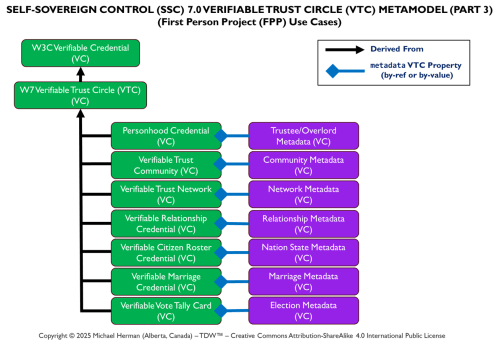

By Trust Relationship (Verifiable Trust Circle (VTC))

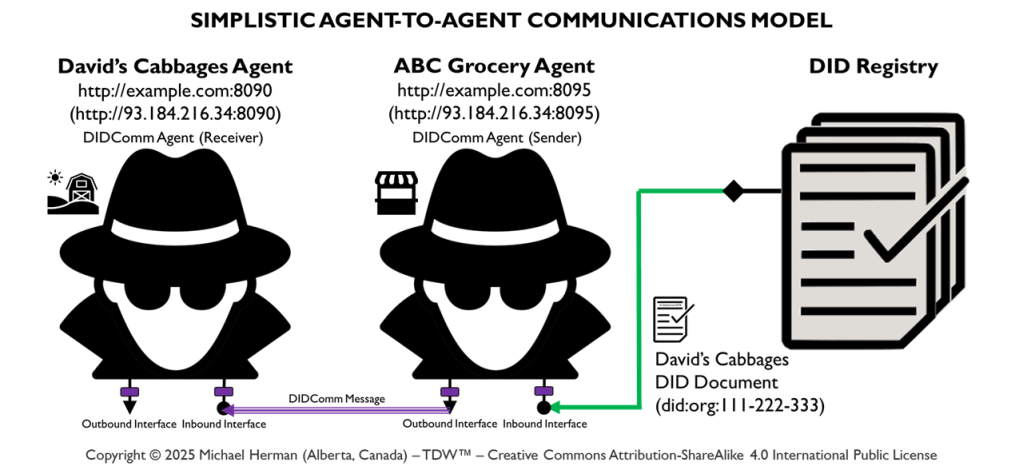

Simplistic Agent-to-Agent Communications Model

Figure 0 depicts the design of a typical simple agent-to-agent communications model. DIDComm Notation was used to create the diagram.

TDW AgenticOS: Conceptual and Logical Architecture

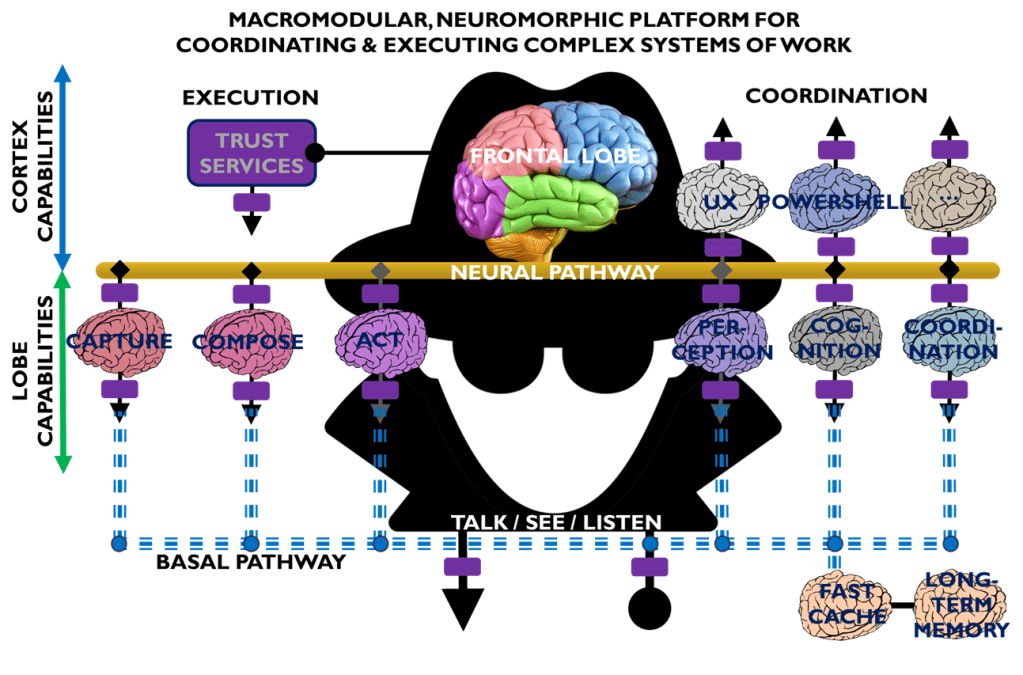

The Web 7.0 architecture is illustrated in the following figure.

Figure 1 is an all-in illustration of the conceptual architecture of a Web 7.0 Neuromorphic Agent. A Web 7.0 Agent is comprised of a Frontal LOBE and the Neural Messaging pathway. An Agent communicates with the outside world (other Web 7.0 Agents) using its Outbound (Talking), Seeing, and Inbound (Listening) Interfaces. Agents can be grouped together into Neural Clusters to form secure and trusted multi-agent organisms. DIDComm/HTTP is the default secure digital communications protocol (see DIDComm Messages as the Steel Shipping Containers of Secure, Trusted Digital Communication). The Decentralized Identifiers (DIDs) specification is used to define the Identity layer in the Web 7.0 Messaging Superstack (see Figure 6 as well as Decentralized Identifiers (DIDs) as Barcodes for Secure, Trusted Digital Communication).

An agent remains dormant until it receives a message directed to it and returns to a dormant state when no more messages are remaining to be processed. An agent’s message processing can be paused without losing any incoming messages. When an agent is paused, messages are received, queued, and persisted in long-term memory. Message processing can be resumed at any time.
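The dormant, paused, and resumed behaviour described above can be sketched in a few lines of Python. This is only an illustrative sketch, not TDW AgenticOS code: the `SketchAgent` class, its `inbox` deque (an in-memory stand-in for long-term memory), and the state names are all assumptions made for this example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque


@dataclass
class SketchAgent:
    """Hypothetical agent showing dormant, active, and paused behaviour."""

    handler: Callable[[dict], None]
    state: str = "dormant"
    paused: bool = False
    # In-memory stand-in for long-term memory; a real agent would use durable storage.
    inbox: Deque[dict] = field(default_factory=deque)

    @property
    def pending(self) -> int:
        return len(self.inbox)

    def receive(self, message: dict) -> None:
        # Messages are always accepted and queued, even while paused.
        self.inbox.append(message)
        if not self.paused:
            self._drain()

    def pause(self) -> None:
        self.paused = True
        self.state = "paused"

    def resume(self) -> None:
        self.paused = False
        self._drain()

    def _drain(self) -> None:
        self.state = "active"
        while self.inbox and not self.paused:
            self.handler(self.inbox.popleft())
        # Go dormant once the queue is empty, unless a handler paused the agent.
        self.state = "paused" if self.paused else "dormant"
```

Because `receive` queues a message before checking the pause flag, nothing arriving during a pause is dropped; `resume` simply drains whatever accumulated.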

Additionally, an Agent can include a dynamically changing set of Coordination and Execution LOBEs. These LOBEs enable an Agent to capture events (incoming messages), compose responses (outgoing messages), and share these messages with one or more Agents (within a specific Neural Cluster or externally with the Beneficial Agent in other Neural Clusters (see Figure 5)).

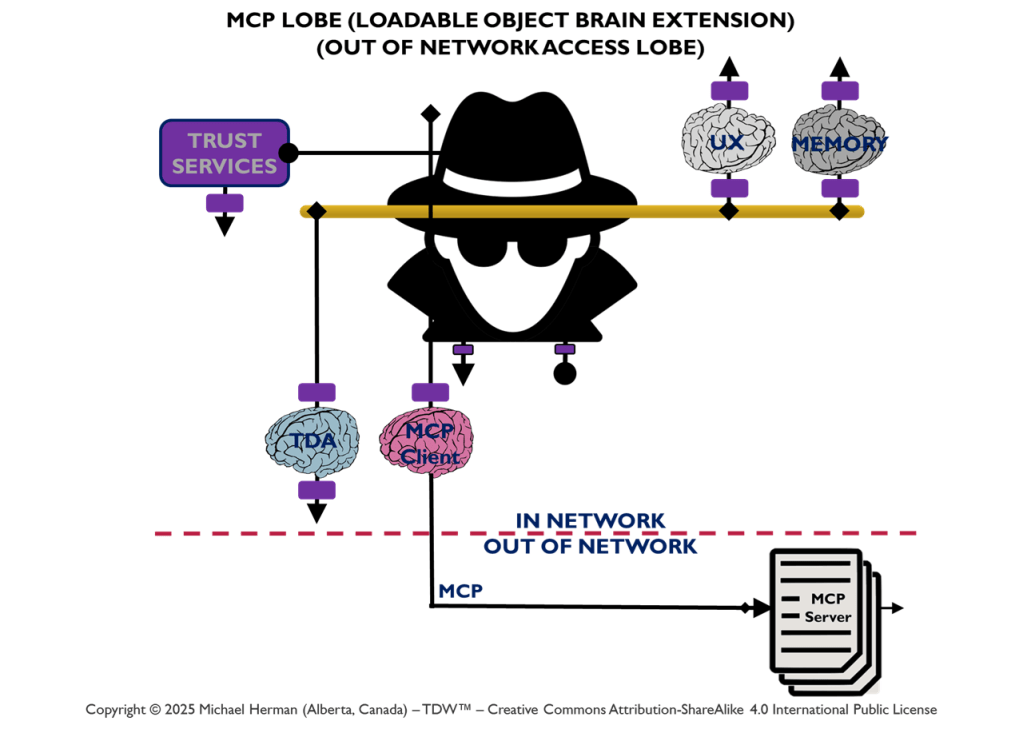

What is a LOBE?

LOBE (Loadable Object Brain Extensions) is a macromodular, neuromorphic intelligence framework designed to let systems grow, adapt, and evolve by making it easy to add new capabilities at any time. Each LOBE is a dynamically Loadable Object — a self-contained cognitive module that extends the Frontal LOBE’s functionality, whether for perception, reasoning, coordination, or control (execution). Together, these LOBEs form a dynamic ecosystem of interoperable intelligence, enabling developers to construct distributed, updatable, and extensible minds that can continuously expand their understanding and abilities.

LOBEs let intelligence and capability grow modularly. Add new lobes, extend cognition, and evolve systems that learn, adapt, and expand over time. Expand your brain. A brain that grows with every download.

What is a NeuroPlex?

A Web 7.0 Neuroplex (aka a Neuro) is a dynamically composed, decentralized, message-driven cognitive solution that spans one or more agents, each with its own dynamically configurable set of LOBEs (Loadable Object Brain Extensions). Each LOBE is specialized for a particular type of message. Agents automatically support extraordinarily efficient by-reference, in-memory, intra-agent message transfers.
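One way to picture a dynamically configurable set of LOBEs, each specialized for a message type, is a registry keyed by message type. The sketch below is an assumption-laden illustration only: `FrontalLobeSketch`, `load`, `unload`, `dispatch`, and the `example/...` message types are hypothetical names, not part of any published Web 7.0 interface.

```python
from typing import Callable, Dict

# A LOBE is modelled here as a plain callable: message in, message out (assumption).
Lobe = Callable[[dict], dict]


class FrontalLobeSketch:
    """Hypothetical Frontal LOBE holding a changeable set of LOBEs."""

    def __init__(self) -> None:
        self._lobes: Dict[str, Lobe] = {}

    def load(self, message_type: str, lobe: Lobe) -> None:
        # LOBEs can be added at any time, without restarting the agent.
        self._lobes[message_type] = lobe

    def unload(self, message_type: str) -> None:
        self._lobes.pop(message_type, None)

    def dispatch(self, message: dict) -> dict:
        # Select the LOBE specialized for this message type.
        lobe = self._lobes.get(message["type"])
        if lobe is None:
            raise LookupError(f"no LOBE loaded for {message['type']!r}")
        return lobe(message)


def greeting_lobe(message: dict) -> dict:
    """Illustrative LOBE that answers a hypothetical greeting message."""
    return {"type": "example/greeting-reply", "body": f"Hello, {message['body']}"}
```

A caller would `load("example/greeting", greeting_lobe)` and then `dispatch` messages of that type; unloading the LOBE removes the capability again.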

A Web 7.0 Neuroplex is not a traditional application or a client–server system, but an emergent, collaborative execution construct assembled from independent, socially-developed cognitive components (LOBEs) connected together by messages. Execution of a Neuroplex is initiated with a NeuroToken.

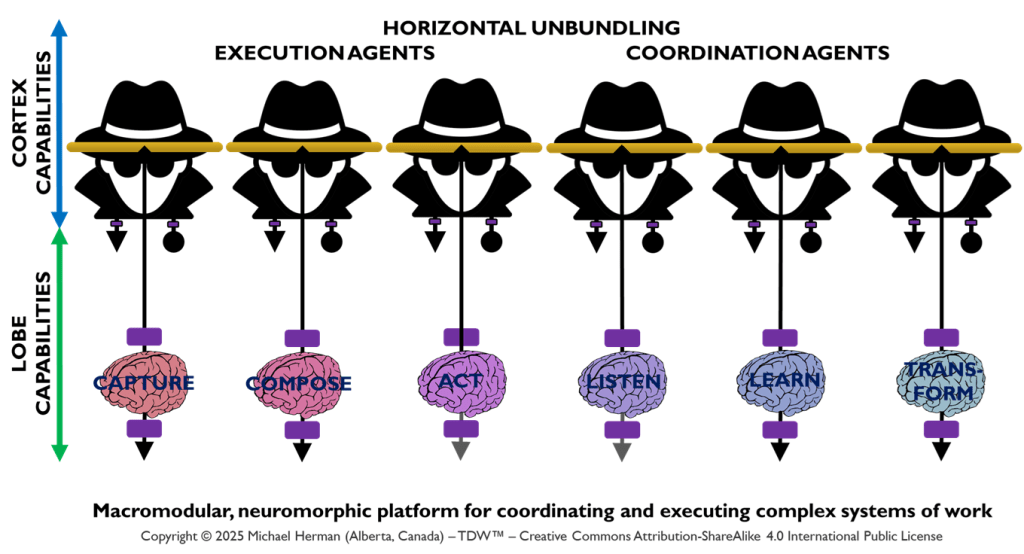

Horizontal Unbundling: Coordination and Execution Agents

Figure 2 illustrates how the deployment of Coordination and Execution LOBEs can be horizontally unbundled – with each LOBE being assigned to a distinct Frontal LOBE. This is an extreme example designed to underscore the range of deployment options that are possible. Figure 3 is a more common pattern.

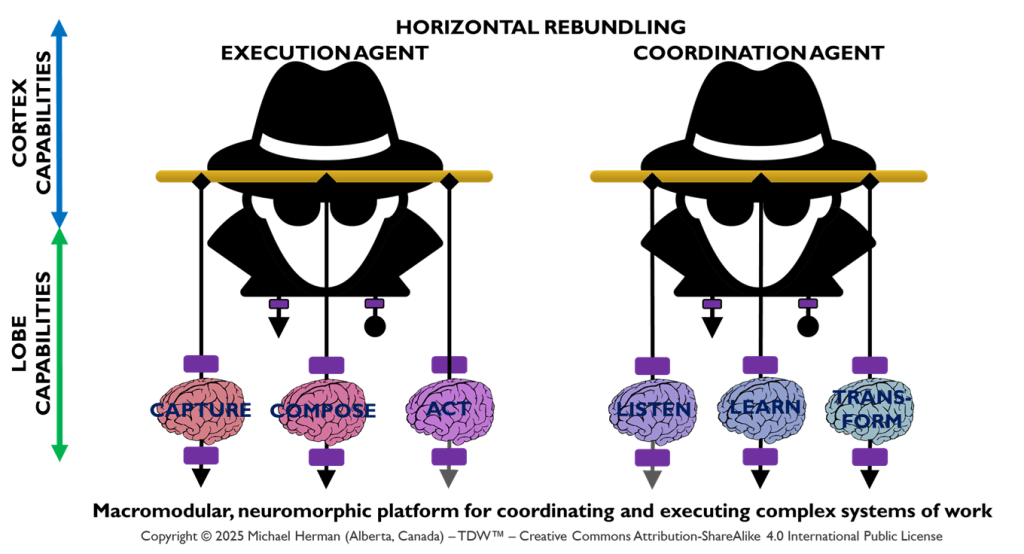

Horizontal Rebundling

Figure 3 depicts a more common/conventional deployment pattern where, within a Neural Cluster, a small, reasonable number of Frontal LOBEs host any collection of Coordination and/or Execution LOBEs.

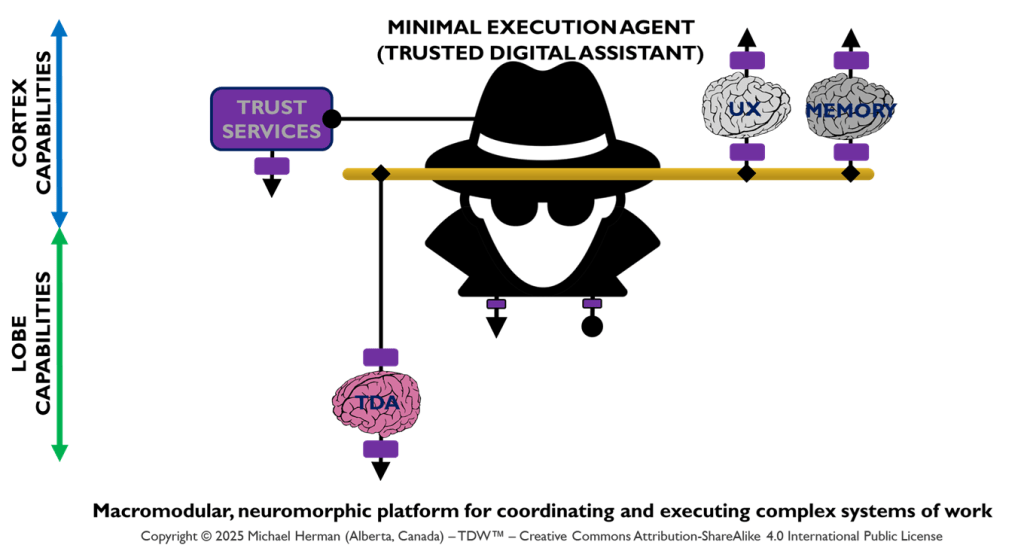

Minimal Execution Agent (Trusted Digital Assistant)

Figure 4a is an example of a minimal agent deployment pattern that hosts a single Trusted Digital Assistant (TDA) LOBE.

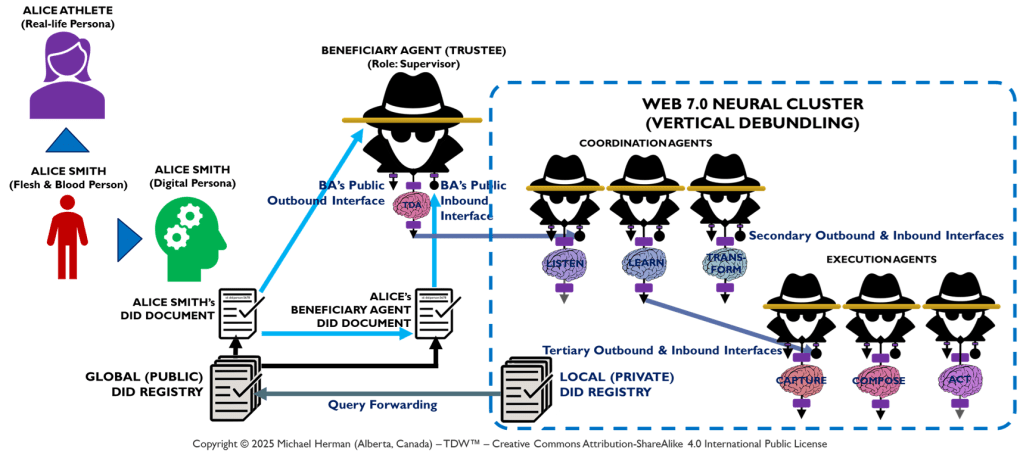

Vertical Debundling: Web 7.0 Neural Clusters

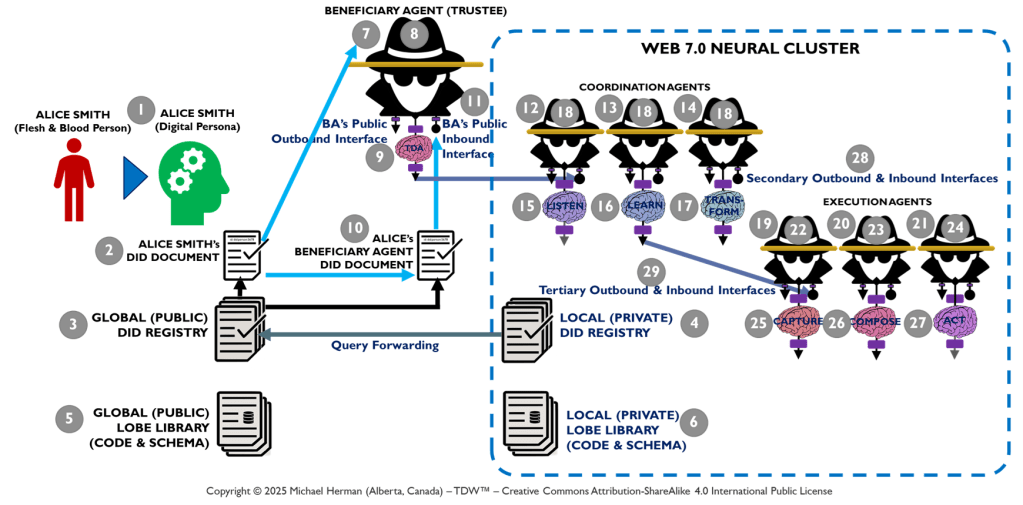

Figure 5 depicts the deployment of a Web 7.0 Neural Cluster. Messages external to the Neural Cluster are only sent/received from the Beneficial Agent. Any additional messaging is limited to the Beneficial, Coordination, and Execution LOBEs deployed within the boundary of a Neural Cluster. A use case that illustrates the Neural Cluster model can be found in Appendix D – PWC Multi-Agent Customer Support Use Case.
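The boundary rule in this paragraph (external traffic only through the Beneficial Agent, unrestricted traffic inside the cluster) can be expressed as a small delivery check. This is a sketch under stated assumptions: the `NeuralClusterSketch` class, the `may_deliver` method, and the `did:example:` identifiers are hypothetical and chosen only for illustration.

```python
from typing import Iterable


class NeuralClusterSketch:
    """Hypothetical model of a Neural Cluster boundary."""

    def __init__(self, beneficial_agent: str, members: Iterable[str]) -> None:
        self.beneficial_agent = beneficial_agent
        # The Beneficial Agent is itself a member of the cluster.
        self.members = set(members) | {beneficial_agent}

    def may_deliver(self, sender: str, receiver: str) -> bool:
        sender_inside = sender in self.members
        receiver_inside = receiver in self.members
        if sender_inside and receiver_inside:
            return True  # internal messaging stays within the boundary
        if sender_inside != receiver_inside:
            # Exactly one party is inside: it must be the Beneficial Agent.
            inside = sender if sender_inside else receiver
            return inside == self.beneficial_agent
        return False  # neither party belongs to this cluster


cluster = NeuralClusterSketch(
    beneficial_agent="did:example:beneficial",
    members=["did:example:coordinator", "did:example:executor"],
)
```

With this cluster, a message from `did:example:outsider` to `did:example:beneficial` is allowed, while the same outsider addressing `did:example:executor` directly is rejected.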

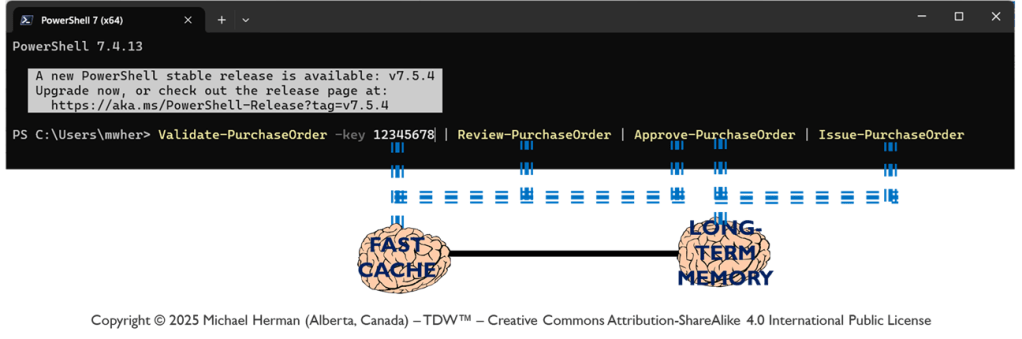

DIDComm 7.0

Figure 6a is an all-in illustration of the conceptual architecture of a Web 7.0 Neuromorphic Agent. DIDComm Messages can be piped from the Outbound Interface of the Sender agent to the Inbound Interface of the Receiver agent – supporting the composition of secure, trusted agent-to-agent pipelines similar (but superior) to: i) UNIX command pipes (based on text streams), and ii) PowerShell pipelines (based on a .NET object pump implemented by calling ProcessRecord() in the subsequent cmdlet in the pipeline).

NOTE: PowerShell does not clone, serialize, or duplicate .NET objects when moving them through the pipeline (except in a few special cases). Instead, the same instance reference flows from one pipeline stage (cmdlet) to the next …neither does DIDComm 7.0 for DIDComm Messages.

Bringing this all together, a DIDComm Message (DIDMessage) can be passed, by reference, from LOBE (Agenlet) to LOBE (Agenlet), in-memory, without serialization/deserialization or physical transport over HTTP (or any other protocol).
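The difference between by-reference, in-memory passing and a serialized hop can be shown with ordinary Python objects. This is a minimal sketch, assuming a message is a plain dictionary and a LOBE is a function; `pipe_in_memory`, `pipe_over_wire`, and the two example LOBEs are hypothetical names, and JSON stands in for whatever wire encoding a real transport would use.

```python
import json
from typing import Callable, List

Lobe = Callable[[dict], dict]


def stamp_lobe(message: dict) -> dict:
    """Illustrative LOBE: records that it handled the message."""
    message["seen_by"].append("stamp")
    return message


def audit_lobe(message: dict) -> dict:
    """Illustrative LOBE: records that it handled the message."""
    message["seen_by"].append("audit")
    return message


def pipe_in_memory(message: dict, lobes: List[Lobe]) -> dict:
    # The same object reference flows from LOBE to LOBE; nothing is copied.
    for lobe in lobes:
        message = lobe(message)
    return message


def pipe_over_wire(message: dict, lobes: List[Lobe]) -> dict:
    # For contrast: serialize and deserialize at every hop, as a transport would.
    for lobe in lobes:
        message = lobe(json.loads(json.dumps(message)))
    return message
```

In the in-memory case the object returned is the very object passed in (`result is original`), whereas the wire-style pipeline returns an equal but distinct copy and pays an encode and decode cost at each stage.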

| PowerShell | DIDComm 7.0 |
| --- | --- |
| powershell.exe | tdwagent.exe |
| Cmdlet | LOBE (Loadable Object Brain Extension) |
| .NET Object | Verifiable Credential (VC) |
| PSObject (passed by reference) | DIDMessage (JWT) (passed by reference) |
| PowerShell Pipeline | Web 7.0 Verifiable Trust Circle (VTC) |
| Serial Routing (primarily) | Arbitrary Graph Routing (based on Receiver DID, Sender DID, and DID Message type) |
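To make the last row of the comparison concrete, the sketch below routes a message by matching on Receiver DID, Sender DID, and message type, and can return more than one next hop, which is what distinguishes graph routing from a serial pipeline. Everything here is an assumption for illustration: the `GraphRouterSketch` class, the `receiver`, `sender`, and `type` field names, and the `did:example:` identifiers are hypothetical.

```python
from typing import List, Optional, Tuple

# (receiver DID, sender DID, message type); None acts as a wildcard.
RoutePattern = Tuple[Optional[str], Optional[str], Optional[str]]


class GraphRouterSketch:
    """Hypothetical router selecting next hops from three message attributes."""

    def __init__(self) -> None:
        self._routes: List[Tuple[RoutePattern, str]] = []

    def add_route(
        self,
        target: str,
        receiver: Optional[str] = None,
        sender: Optional[str] = None,
        message_type: Optional[str] = None,
    ) -> None:
        self._routes.append(((receiver, sender, message_type), target))

    def next_hops(self, message: dict) -> List[str]:
        actual = (message["receiver"], message["sender"], message["type"])
        hops = []
        for pattern, target in self._routes:
            # A route matches when every non-wildcard field equals the message's.
            if all(p is None or p == a for p, a in zip(pattern, actual)):
                hops.append(target)
        return hops


router = GraphRouterSketch()
router.add_route("billing-lobe", message_type="example/invoice")
router.add_route("audit-lobe", sender="did:example:alice")
router.add_route("bob-inbox", receiver="did:example:bob", message_type="example/note")
```

An invoice sent by `did:example:alice` fans out to both `billing-lobe` and `audit-lobe`, while a note from another sender addressed to `did:example:bob` goes only to `bob-inbox`.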

Feedback from a reviewer: Passing DIDComm messages by reference like you’re describing is quite clever. A great optimization.

Coming to a TDW LOBE near you…

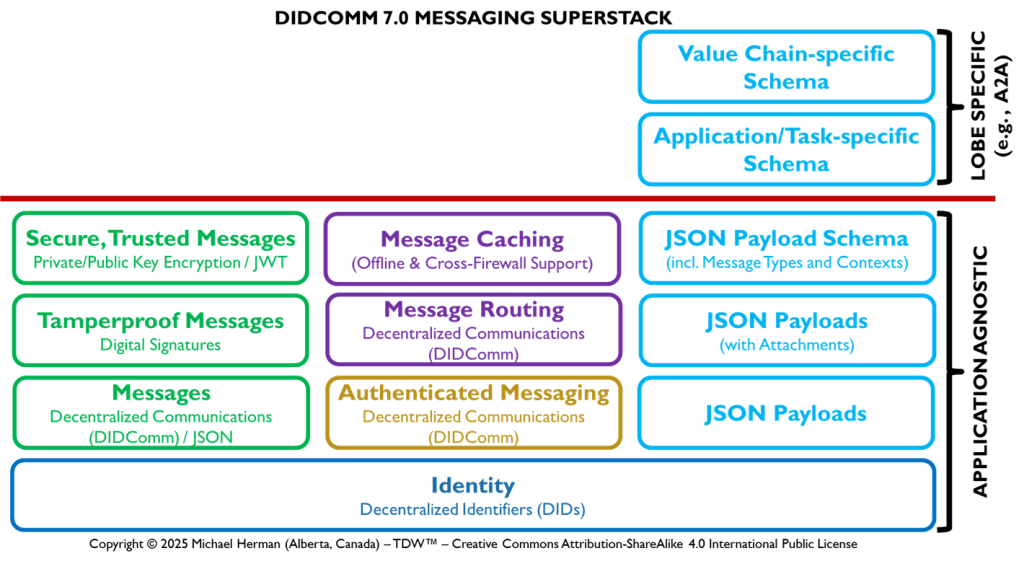

DIDComm 7.0 Superstack

Figure 6b illustrates the interdependencies of the multiple layers within the DIDComm 7.0 Superstack.

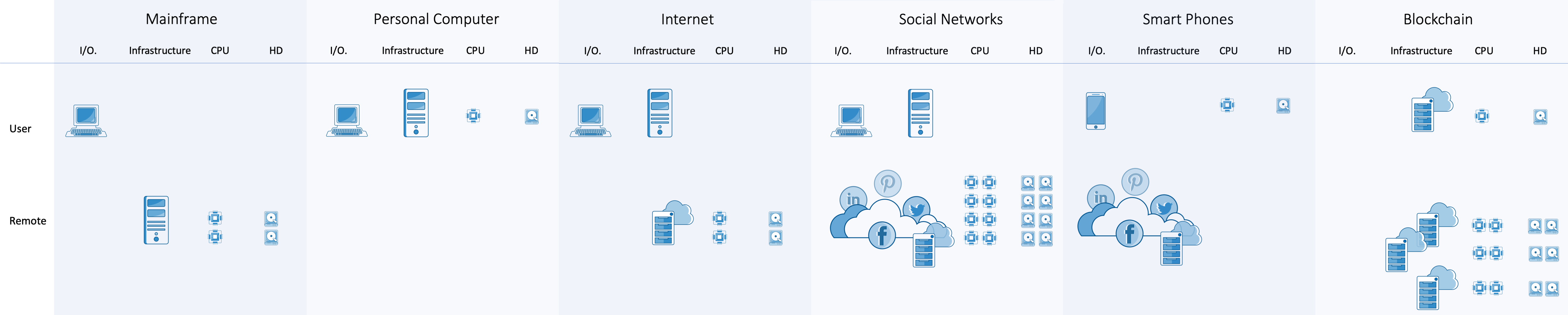

Technology Wheel of Reincarnation: Win32 generic.c

References

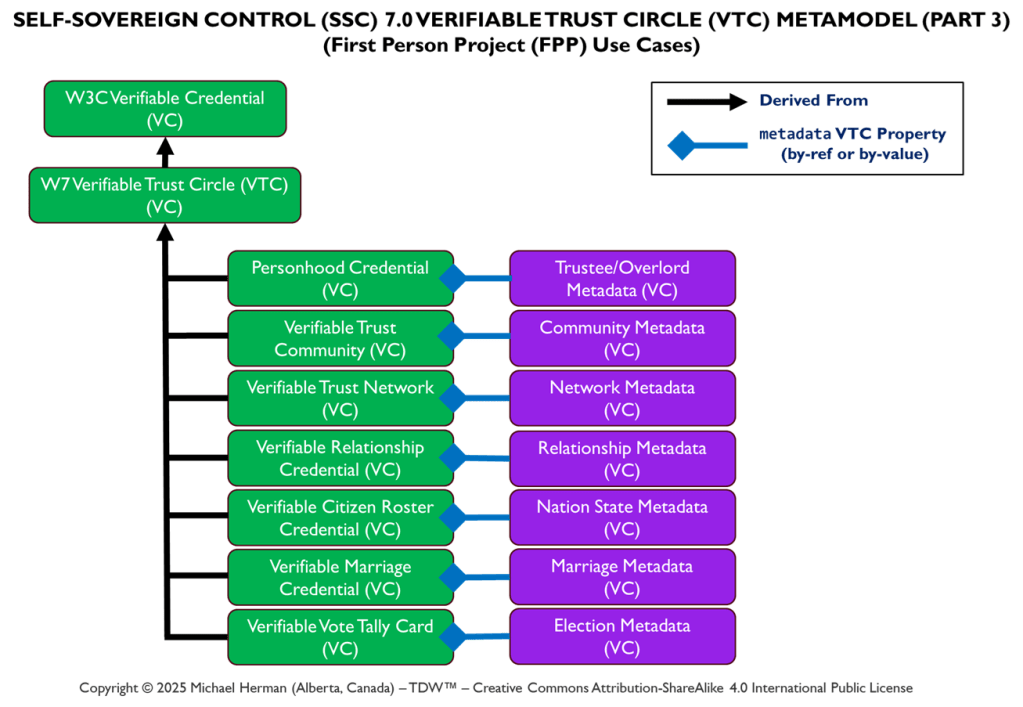

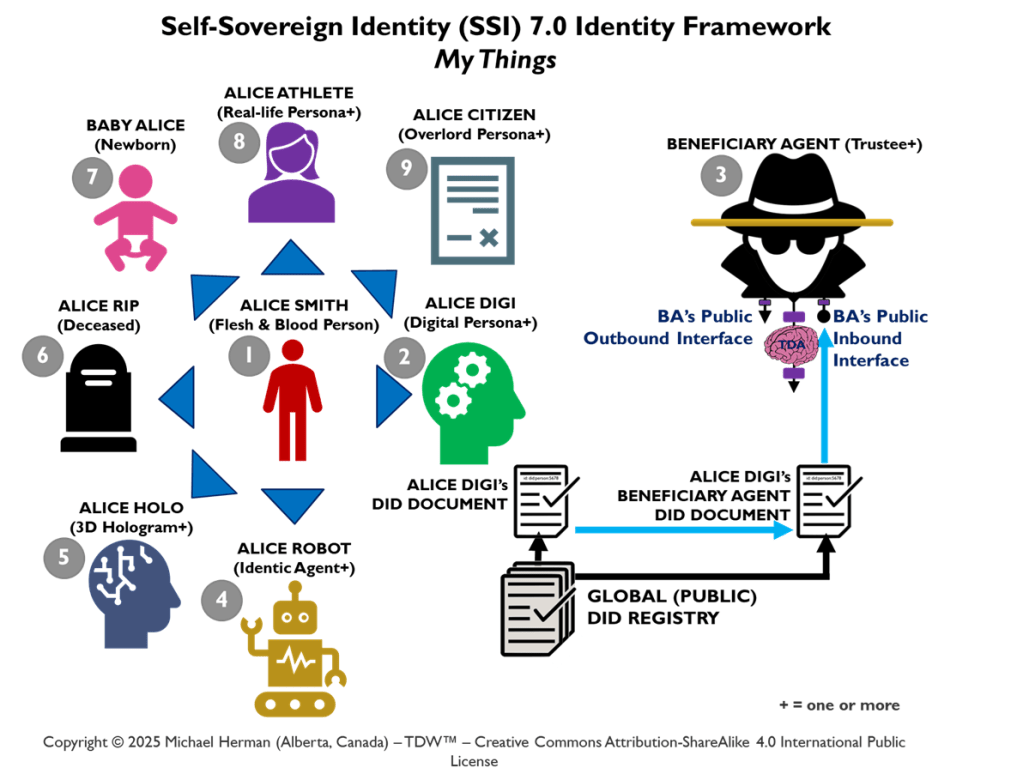

SSI 7.0 Identity Framework

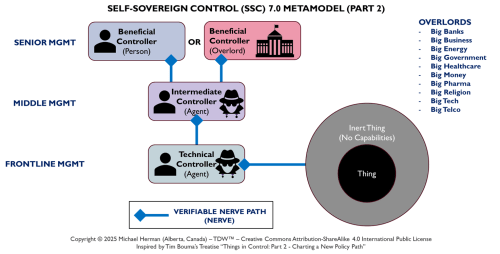

SSC 7.0 Metamodel

SSC 7.0 Verifiable Trust Circles

Web 7.0 Neuromorphic Agent Identity Model (NAIM)

Figure 7. Web 7.0 Neuromorphic Agent Identity Model (NAIM)

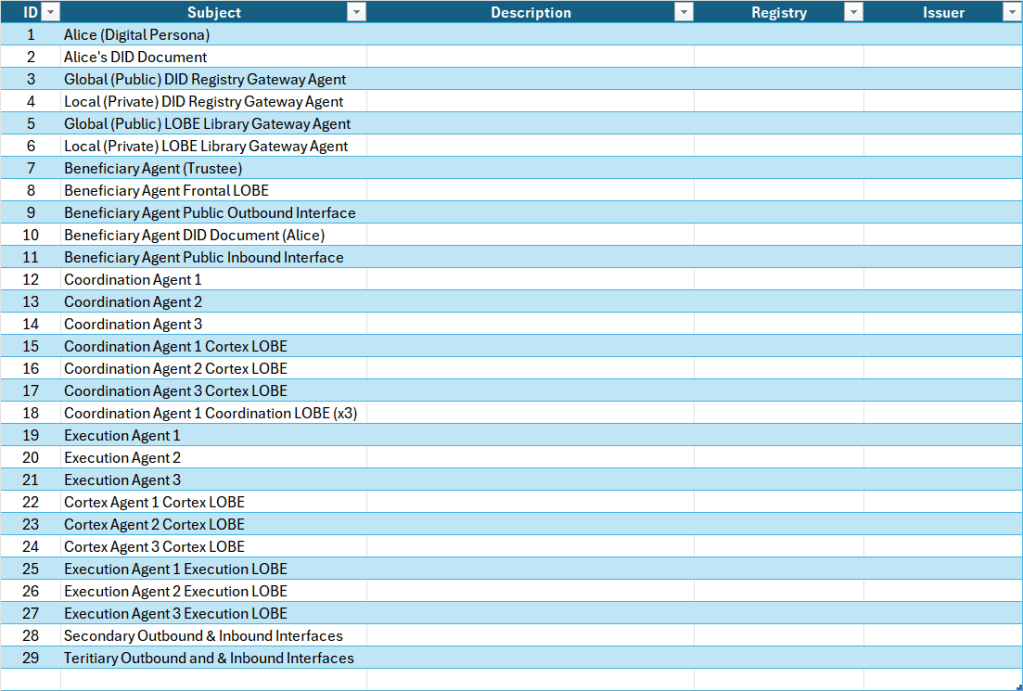

The NAIM seeks to enumerate and identify all of the elements in the NAARM that have or will need an identity (DID and DID Document). This is illustrated in Figure 7.

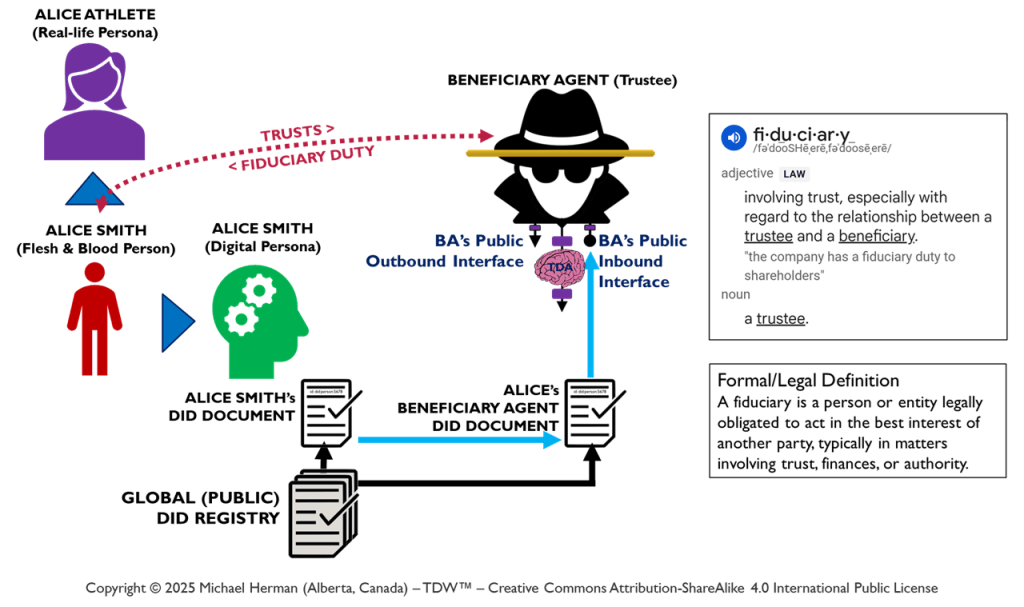

Beneficiaries, Trustees, and Fiduciary Duty

Figure 8 highlights in red the trusts and fiduciary duty relationships between (a) a Beneficiary (Alice, the person) and (b) her Beneficiary Agent (a trustee). Similarly, any pair of agents can also have pair-wise trusts and fiduciary duty relationships where one agent serves in the role of Beneficiary and the second agent, the role of Trustee.

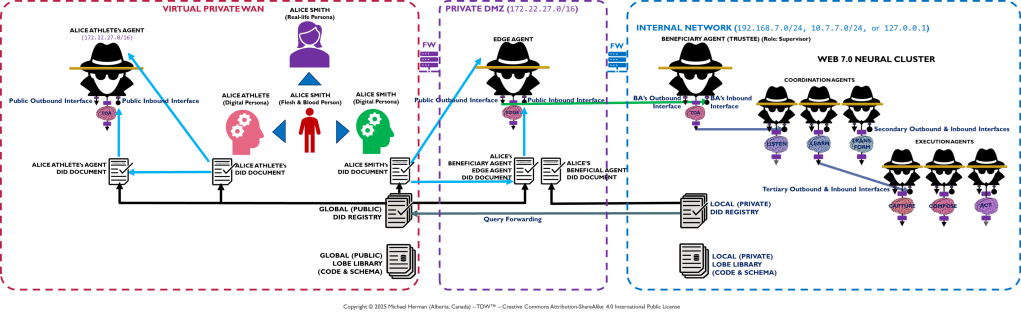

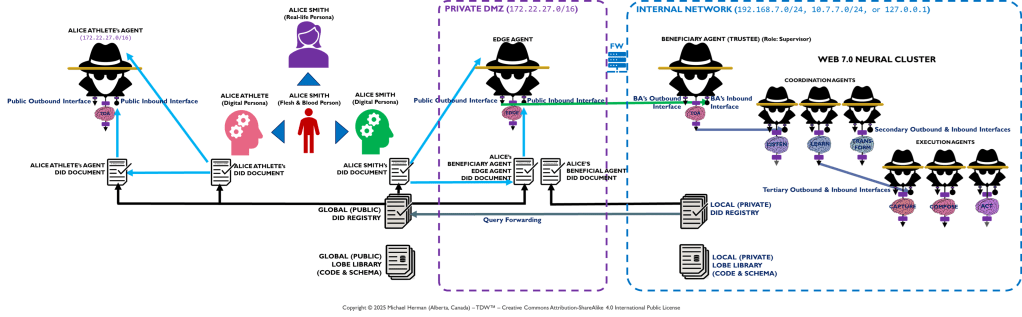

Appendix A – TDW AgenticOS: Edge Agent DMZ Deployment

This section is non-normative.

Appendix B – TDW AgenticOS: Multiple Digital Persona Deployment

This section is non-normative.

Alice has 2 digital personifications: Alice Smith and Alice Athlete. Each of these personifications has its own digital ID. Each of Alice’s personas also has its own Trusted Digital Assistant (TDA) – an agent or agentic neural network.

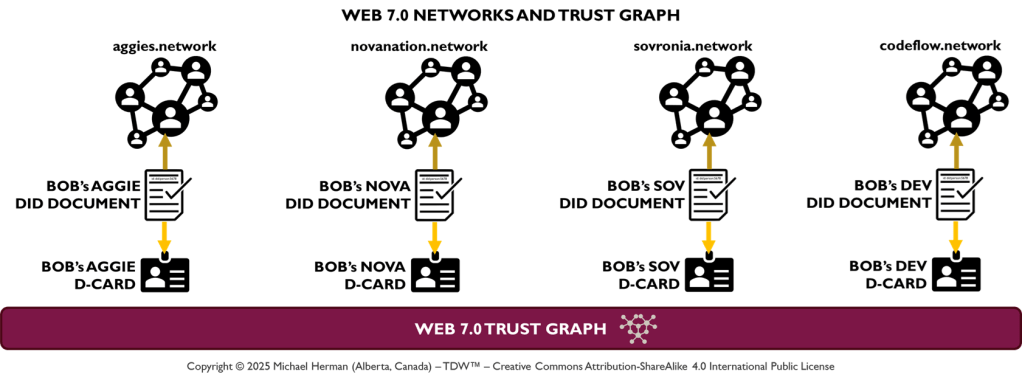

Bob has (at least) 4 digital personifications: Bob Aggie, Bob Nova, Bob Sovronia, and Bob Developer. Using Web 7.0 Trust Graph Relationships and Verifiable Trust Credentials (VTCs), Bob can also have personas that are members of multiple Web 7.0 networks.

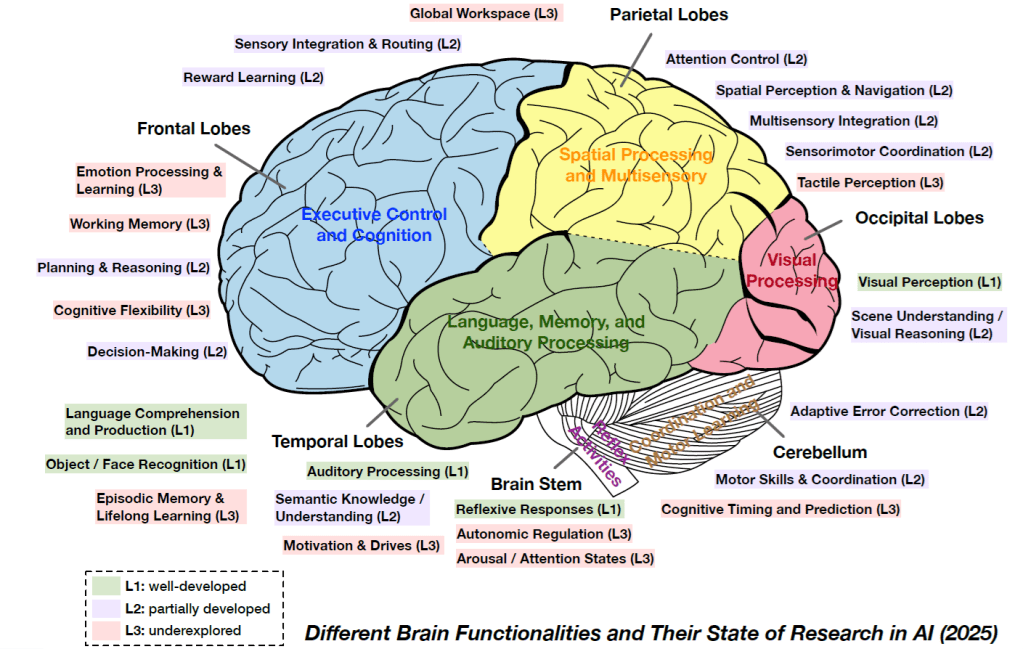

Appendix C – Different Brain Functionalities and Their State of Research in AI (2025)

Source: Advances and Challenges in Foundation Agents: From Brain-Inspired Intelligence to Evolutionary, Collaborative, and Safe Systems. arXiv:2504.01990v2 [https://arxiv.org/abs/2504.01990v2]. August 2025.

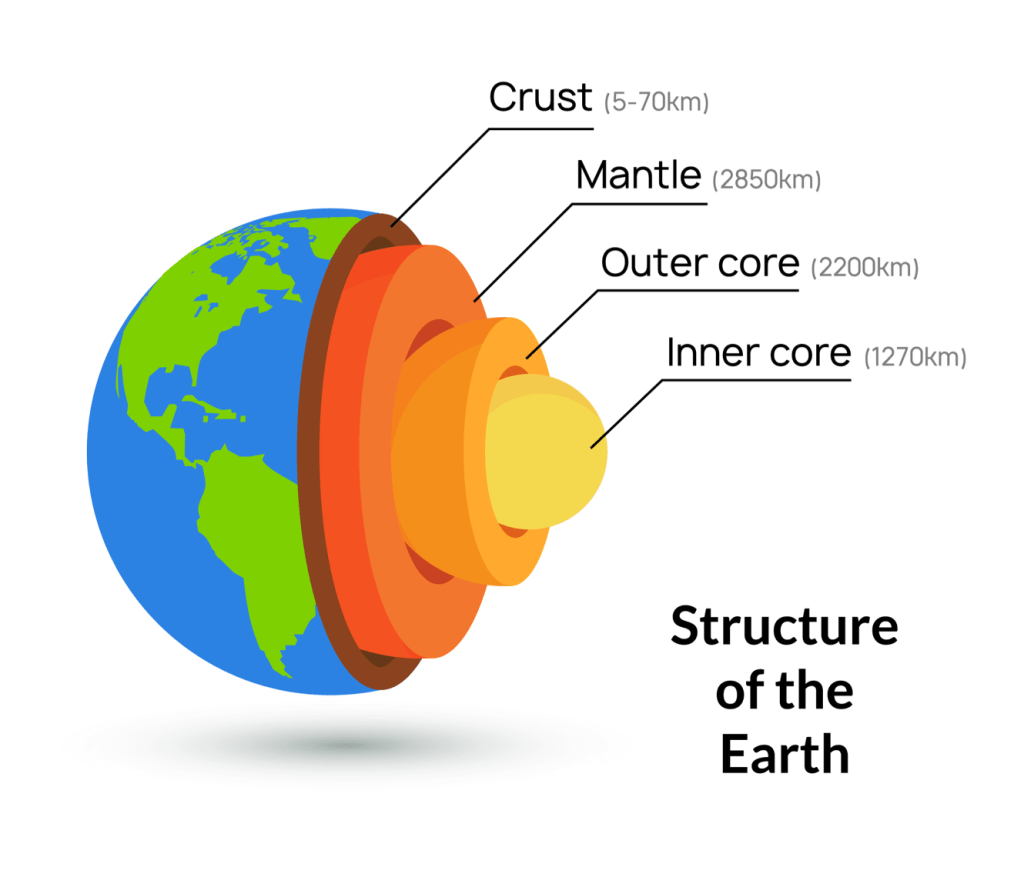

In Figure C-3, the Trust Library forms the Inner core and the UX LOBEs, the Crust. The Outer core is comprised of the Fast Cache and Long-Term Memory LOBEs, Neural and Basal Pathways, DID Registry, and LOBE Library. The Mantle is where the Coordination and Execution LOBEs execute.

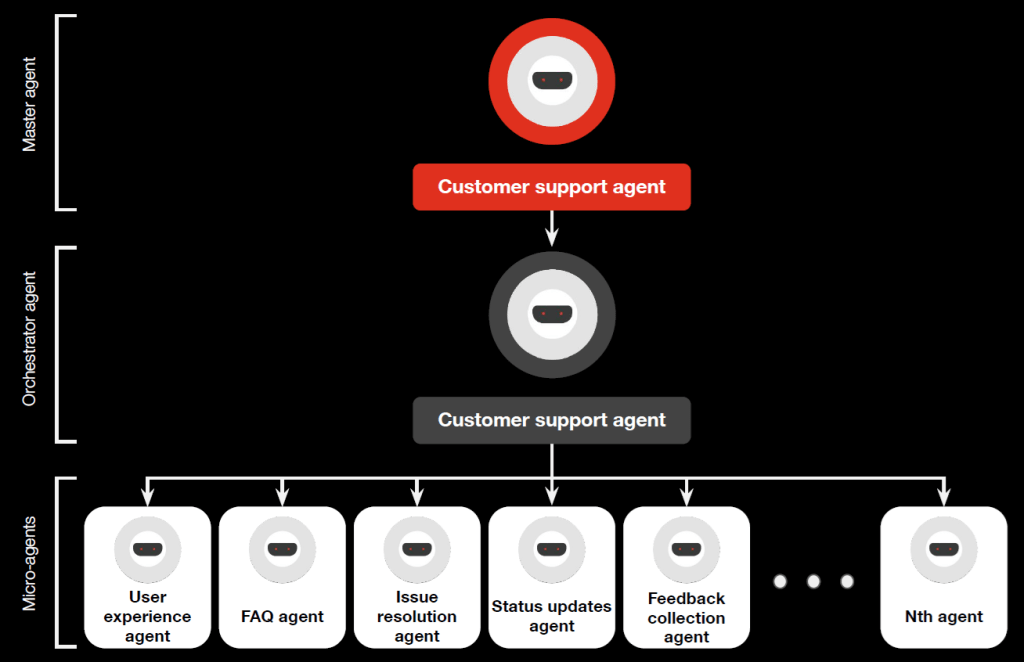

Appendix D – PWC Multi-Agent Customer Support Use Case

Source: Agentic AI – the new frontier in GenAI. PWC Middle East. 2024.

This use case exemplifies the use of the Web 7.0 Neural Cluster model. Table D-1 maps the PWC Use Case terminology to the corresponding Web 7.0 NAARM terminology.

| Web 7.0 NAARM | PWC Use Case |
| --- | --- |
| Beneficiary Agent | Master agent |
| Coordination Agent (and LOBEs) | Orchestrator agent |
| Execution Agent LOBEs | Micro-agents |

Appendix E – Groove Workspace System Architecture

Resources

Macromodularity

- Organization of computer systems: the fixed plus variable structure computer. Gerald Estrin. 1960.

- Macromodular computer systems. Wesley Clark. 1967.

- Logical design of macromodules. Mishell J. Stucki et al. 1967.

Powered By

Michael Herman

Decentralized Systems Architect

Web 7.0 Foundation

October 15, 2025