Copyright © 2026 Michael Herman (Bindloss, Alberta, Canada) – Creative Commons Attribution-ShareAlike 4.0 International Public License

Abstract

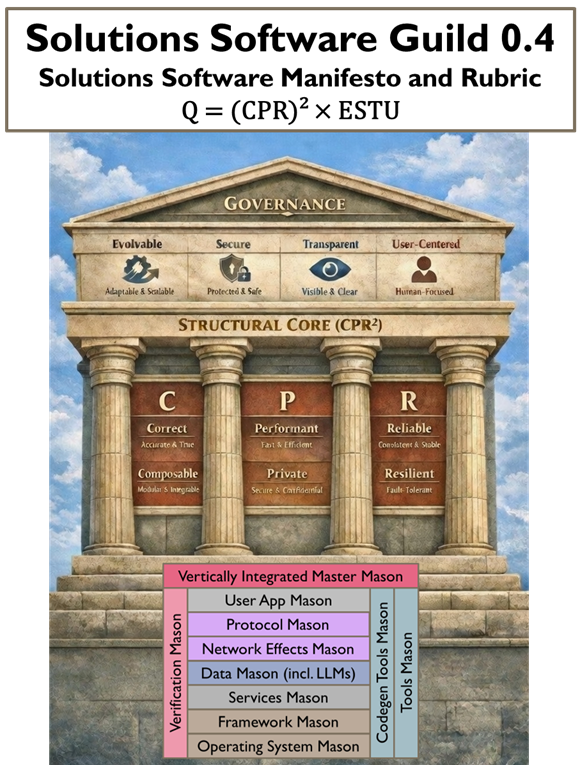

This short paper introduces a quantitative model of solutions software quality defined as Q = (CPR)^2 × G, where CPR represents structural engineering strength and G represents governance strength. The model formalizes the nonlinear contribution of engineering integrity and the linear moderating role of governance. By separating structural properties from governance mechanisms and applying geometric aggregation within the structural core to prevent compensatory inflation, the framework, expressed as a rubric, establishes a mathematically grounded theory of structural primacy in solutions software systems.

Intended Audience

The intended audience for this paper is a broad range of professionals interested in furthering their understanding of solutions software quality as it relates to software apps, agents, and services. This includes software architects, application developers, and user experience (UX) specialists, as well as people involved in software endeavors related to artificial intelligence, including:

- Operating System Masons

- Framework Masons

- Services Masons

- Data Masons (including the development of LLMs)

- Network Effects Masons

- Protocol Masons

- User-facing App Masons

- Tools Masons

- Codegen Tools Masons

- Verification Masons

- Vertically Integrated Master Masons

Solutions Software Quality Principles

The Solutions Software Quality Principles form a flat, single-level list that serves as an orthogonal spanning set of solutions software quality metrics:

- Correctness: Software produces outputs that correspond to reality and specification.

- Composable: Can be safely combined with other systems through clean interfaces.

- Performant: Uses time and resources efficiently to achieve its function.

- Privacy-First: Minimizes unnecessary data exposure and maximizes user control over data.

- Reliable: Produces expected results consistently over time.

- Resilient: Maintains function under stress, degradation, or partial failure.

- Evolvable: Designed to adapt over time without collapse.

- Secure: Resists unauthorized access, misuse, and adversarial compromise.

- Transparent: Observable, auditable, and understandable.

- User-Centered: Optimized for human goals, comprehension, and agency.

Orthogonal Major and Minor Axes

The following categorization is presented for explanatory purposes. It organizes the Solutions Software Quality (SSQ) Principles into a two-level orthogonal spanning set of major and minor axes.

Spatial

- Composable

- User-Centered

Temporal

- Evolvable

- Reliable

- Resilient

Integrity

- Correctness

- Secure

- Privacy-First

Efficiency

- Performant

Observability

- Transparent

Structural Core

The Structural Core of a solutions software system is the set of fundamental engineering dimensions that ensure its integrity, stability, and robustness. It encompasses the interrelated principles of correctness, composability, performance, privacy, reliability, and resilience—forming the foundational “skeleton” upon which all other governance and user-facing features depend.

The structural core is the backbone of a system, combining correctness, composability, performance, privacy, reliability, and resilience to create a stable, robust foundation for all other features and governance mechanisms.

Structural Core Quality Score (CPR)

The structural core quality score (CPR) consists of three geometric pairings:

C = √(Correctness × Composable)

P = √(Performant × Privacy-First)

R = √(Reliable × Resilient)

The aggregate structural core quality is computed as the geometric mean of C, P, and R:

CPR = ³√(C × P × R)

The use of geometric means penalizes imbalance and prevents compensatory scoring. Structural core quality is then squared to reflect compounding architectural leverage: (CPR)^2.
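The pairing and aggregation steps above can be sketched in Python as follows. This is a minimal sketch, assuming each principle has already been scored on the 0 to 5 scale from Appendix A; the function name `structural_core_score` is hypothetical. All three example systems have the same total of raw scores (24), yet the geometric means rank them differently.

```python
import math

def structural_core_score(correctness: float, composable: float,
                          performant: float, privacy_first: float,
                          reliable: float, resilient: float) -> float:
    """Hypothetical helper: CPR as the geometric mean of three pairwise geometric means."""
    c = math.sqrt(correctness * composable)    # C: logical & structural integrity
    p = math.sqrt(performant * privacy_first)  # P: practical operational quality
    r = math.sqrt(reliable * resilient)        # R: temporal robustness
    return (c * p * r) ** (1 / 3)              # CPR = cube root of (C × P × R)

# Each profile sums to 24, but balance differs.
balanced = structural_core_score(4, 4, 4, 4, 4, 4)
imbalanced = structural_core_score(5, 3, 5, 3, 5, 3)
lopsided = structural_core_score(5, 5, 5, 0, 5, 4)  # Privacy-First scored 0

print(f"balanced:   CPR={balanced:.3f}  CPR^2={balanced ** 2:.3f}")      # 4.000, 16.000
print(f"imbalanced: CPR={imbalanced:.3f}  CPR^2={imbalanced ** 2:.3f}")  # 3.873, 15.000
print(f"lopsided:   CPR={lopsided:.3f}  CPR^2={lopsided ** 2:.3f}")      # 0.000, 0.000
```

A single zero anywhere in the structural core drives CPR, and therefore Q, to zero, which is the non-compensatory behavior the geometric means are meant to enforce.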

Structural Core Principles (CPR) Relationships and Interactions

C = Correctness + Composable → Logical & Structural Integrity

- Correctness – Software does what it is supposed to do.

- Composable – Software can safely integrate with other systems.

Metaphor: “A brick must be solid and fit with others to build a stable wall.”

Reasoning: A system is only structurally sound if it is both right and integrable.

P = Performant + Privacy-First → Practical Operational Quality

- Performant – Efficient, fast, and resource-conscious.

- Privacy-First – Protects sensitive data and respects boundaries.

Metaphor: “A car must move fast and lock its doors; speed without safety or safety without speed is useless.”

Reasoning: A system is only usable in real life if it is both efficient and safe.

R = Reliable + Resilient → Temporal Robustness

- Reliable – Works consistently under normal conditions.

- Resilient – Survives and recovers from stress, failures, or unexpected events.

Metaphor: “A bridge must stand every day and survive storms to be truly dependable.”

Reasoning: Robustness requires both steady performance and the ability to handle disruption.

Solutions Software Governance (G)

Governance refers to the set of guiding mechanisms that ensure a solutions software system evolves responsibly, remains secure, operates transparently, and serves real user needs. In this context, governance does not create structural strength; rather, it moderates and scales the impact of the structural core over time.

Governance is the institutional layer that guides how a system adapts, secures itself, exposes accountability, and remains user-centered—multiplying the value of structural strength without substituting for it.

Software Governance Quality Score

Governance is modeled as the arithmetic mean of four moderating dimensions:

G = (E + S + T + U) / 4

Where E = Evolvable, S = Secure, T = Transparent, and U = User-Centered. Governance acts as a multiplier rather than an exponent, reflecting its role in scaling engineering outcomes rather than creating them.
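As a minimal sketch (function names hypothetical, scores assumed to lie on the 0 to 5 rubric scale), the governance score and its linear role in Q = (CPR)^2 × G can be expressed as follows. Unlike the geometric aggregation used for CPR, the arithmetic mean is compensatory: a strong governance dimension can offset a weak one, as the two governance profiles below show.

```python
def governance_score(evolvable: float, secure: float,
                     transparent: float, user_centered: float) -> float:
    """Hypothetical helper: G as the arithmetic mean of E, S, T, and U."""
    return (evolvable + secure + transparent + user_centered) / 4

def total_quality(cpr: float, g: float) -> float:
    """Q = (CPR)^2 × G: structure enters squared, governance linearly."""
    return cpr ** 2 * g

g_even = governance_score(4, 4, 4, 4)    # 4.0
g_uneven = governance_score(5, 3, 5, 3)  # also 4.0: strong dimensions offset weak ones
print(g_even, g_uneven)  # 4.0 4.0

# For a fixed CPR, governance scales Q linearly: doubling G doubles Q.
cpr = 3.0
print(total_quality(cpr, 2.0), total_quality(cpr, 4.0))  # 18.0 36.0
```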

Software Governance Principles

E = Evolvable → Adaptation over Time

- Can change, improve, or scale without collapsing.

Metaphor: “A building designed for future expansion is more valuable than one that cannot adapt.”

S = Secure → Protection Against Threats

- Guards against unauthorized access or exploitation.

Metaphor: “A fortress is only valuable if the gates are locked against intruders.”

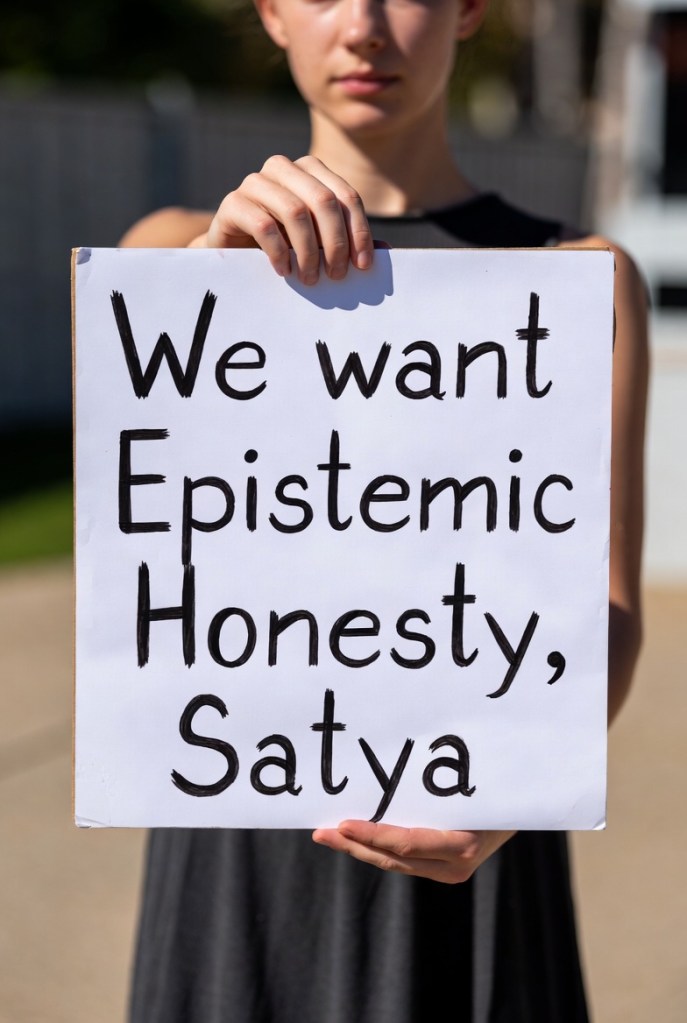

T = Transparent → Observability & Accountability

- Understandable, auditable, and explainable.

Metaphor: “A window in the wall lets people see what’s happening inside; without it, trust is impossible.”

U = User-Centered → Human Alignment

- Designed with human needs, goals, and comprehension in mind.

Metaphor: “A tool is only useful if people can actually use it.”

Solutions Software Quality Total Score (Q)

The complete quality equation is defined as:

Q = (CPR)^2 × G

Taking partial derivatives demonstrates structural dominance:

∂Q/∂CPR = 2CPR × G

∂Q/∂G = (CPR)^2

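Comparing the two in proportional terms, the elasticity of Q with respect to CPR is 2, while its elasticity with respect to G is 1: a 1% improvement in structural strength raises quality by roughly 2%, whereas a 1% improvement in governance raises it by 1%. In absolute terms, a unit improvement in CPR yields a larger gain than a unit improvement in G whenever 2 × CPR × G > (CPR)^2, that is, whenever CPR < 2G.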
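The partial derivatives above can be checked numerically with central finite differences. This is a minimal sketch; the sample point (CPR = 3, G = 2) is an arbitrary choice for illustration. It also shows the proportional view: a 1% increase in CPR raises Q by roughly 2%, whereas a 1% increase in G raises Q by exactly 1%.

```python
def total_quality(cpr: float, g: float) -> float:
    """Q = (CPR)^2 × G."""
    return cpr ** 2 * g

def central_diff(f, x: float, h: float = 1e-6) -> float:
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

cpr, g = 3.0, 2.0  # arbitrary sample point on the 0-5 scale

dq_dcpr = central_diff(lambda x: total_quality(x, g), cpr)  # analytic: 2 × CPR × G = 12
dq_dg = central_diff(lambda y: total_quality(cpr, y), g)    # analytic: (CPR)^2 = 9
print(f"dQ/dCPR ~ {dq_dcpr:.4f} (analytic {2 * cpr * g})")
print(f"dQ/dG   ~ {dq_dg:.4f} (analytic {cpr ** 2})")

# Relative change in Q for a 1% improvement in each input.
q0 = total_quality(cpr, g)
gain_cpr = total_quality(cpr * 1.01, g) / q0 - 1  # 1.01^2 - 1 = 0.0201
gain_g = total_quality(cpr, g * 1.01) / q0 - 1    # 0.01
print(f"+1% CPR -> {gain_cpr:.2%} Q;  +1% G -> {gain_g:.2%} Q")
```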

Organizational Implications

1. Architectural investment yields nonlinear returns.

2. Governance without structure produces superficial quality.

3. High-maturity systems institutionalize structural discipline.

4. Structural shortcuts square long-term risk.

Future Work

Future empirical validation may include retrospective scoring across systems, incident correlation studies, and longitudinal tracking of structural improvements versus governance enhancements.

Conclusion

Solutions software quality behaves quadratically with structural engineering integrity and linearly with governance integrity. This establishes the foundation of the Solutions Software Quality Rubric:

Quality equals the square of engineering strength multiplied by governance strength ((CPR)^2 ESTU).

Appendix A: Solutions Software Quality Rubric

Structural Core (CPR)² × Governance (ESTU)

Scoring scale (per principle):

| Score | Meaning |
|---|---|
| 0 | Absent / actively harmful |
| 1 | Minimal / inconsistent |
| 2 | Adequate but fragile |
| 3 | Strong and reliable |
| 4 | Excellent / exemplary |
| 5 | Industry-leading / best-in-class |

Structural Core (CPR)

These are multiplicative: weakness in one materially degrades overall quality.

C — Correctness

- Produces accurate results

- Meets specification

- Deterministic where required

- Comprehensive test coverage

- Clear validation of edge cases

C — Composable

- Modular components

- Clear interfaces

- Low coupling, high cohesion

- Reusable abstractions

- Integrates cleanly with other systems

P — Performant

- Meets latency/throughput targets

- Efficient resource usage

- Scales predictably

- No unnecessary bottlenecks

- Measured and monitored performance

P — Privacy-First

- Minimizes data collection

- Data use aligned with purpose

- Proper access controls

- Data lifecycle management

- Encryption and isolation practices

R — Reliable

- Consistent uptime

- Predictable behavior

- Low defect rate

- Effective monitoring

- Fast mean time to recovery

R — Resilient

- Graceful degradation

- Fault tolerance

- Redundancy where appropriate

- Handles partial failures safely

- Recovery mechanisms tested

Governance (ESTU)

These modulate long-term sustainability and trust.

E — Evolvable

- Easy to extend or modify

- Backward compatibility strategy

- Clear versioning practices

- Refactor-friendly design

- Maintains architectural integrity over time

S — Secure

- Threat modeling performed

- Least privilege enforced

- Strong authentication

- Regular audits and patching

- Defense-in-depth strategy

T — Transparent

- Observable and auditable

- Clear documentation

- Traceable decision logic

- Honest communication about limitations

- Accessible logs and metrics

U — User-Centered

- Solves real user problems

- Usability tested

- Clear UX flows

- Accessibility considered

- Feedback loops incorporated

Scoring Model

Structural Core Score (CPR)

C = √(Correctness × Composable)

P = √(Performant × Privacy-First)

R = √(Reliable × Resilient)

CPR = ³√(C × P × R)

Governance Score (G)

G = (E + S + T + U) / 4

Total Quality Score (Q)

Q = (CPR)^2 × G

This preserves the insight:

- Core weaknesses compound.

- Governance moderates sustainability.

- High performance without privacy (or correctness without reliability) cannot yield high Q.
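Putting the scoring model together, a minimal end-to-end sketch might look like the following. The dictionary keys, the tuple names, and the function name `score_system` are hypothetical; scores are assumed to lie on the 0 to 5 scale defined above.

```python
import math

STRUCTURAL = ("Correctness", "Composable", "Performant",
              "Privacy-First", "Reliable", "Resilient")
GOVERNANCE = ("Evolvable", "Secure", "Transparent", "User-Centered")

def score_system(scores: dict[str, float]) -> dict[str, float]:
    """Hypothetical scorer: returns CPR, G, and Q = (CPR)^2 × G for one system."""
    for name in STRUCTURAL + GOVERNANCE:
        if not 0 <= scores[name] <= 5:
            raise ValueError(f"{name} must be on the 0-5 scale, got {scores[name]}")
    c = math.sqrt(scores["Correctness"] * scores["Composable"])
    p = math.sqrt(scores["Performant"] * scores["Privacy-First"])
    r = math.sqrt(scores["Reliable"] * scores["Resilient"])
    cpr = (c * p * r) ** (1 / 3)                                  # geometric aggregation
    g = sum(scores[name] for name in GOVERNANCE) / len(GOVERNANCE)  # arithmetic mean
    return {"CPR": cpr, "G": g, "Q": cpr ** 2 * g}

# Correctness at 5 but Reliable at 0: perfect governance cannot rescue Q.
unreliable = dict.fromkeys(STRUCTURAL + GOVERNANCE, 5)
unreliable["Reliable"] = 0
print(score_system(unreliable))  # {'CPR': 0.0, 'G': 5.0, 'Q': 0.0}
```

With every principle at 5, the theoretical maximum is Q = 5² × 5 = 125, which gives a natural denominator if a normalized score is preferred.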

Appendix B – Solutions Software Guild Manifesto (Elevator Pitch)

Solutions Software Quality Rubric ((CPR)^2 ESTU)

Overall solutions software quality grows quadratically with structural engineering strength and only linearly with governance quality.

Formally:

Q = (CPR)^2 × G

Corollaries:

- Governance cannot compensate for structural weakness.

- Structural excellence amplifies governance effectiveness.

- Imbalance within any structural pair (C, P, or R) degrades total quality multiplicatively.

- Sustainable software is governed engineering — but engineered first.

Strategic Interpretation

- If CPR is low: Even perfect governance yields low Q.

- If CPR is high but G is low: The system is powerful but dangerous or unstable long-term.

- If both are high: You get durable, scalable, trustworthy systems.
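The three regimes above can be expressed as a small decision function. This is a hypothetical sketch: the document does not define what counts as "low" or "high", so the cut-off of 3 (the rubric's "Strong and reliable" level) is an assumption, as is the function name `interpret`.

```python
def interpret(cpr: float, g: float, threshold: float = 3.0) -> str:
    """Map (CPR, G) onto the three strategic regimes.

    The threshold is an assumed cut-off: 3 corresponds to
    "Strong and reliable" on the rubric's 0-5 scale.
    """
    if cpr < threshold:
        return "low Q: governance cannot compensate for structural weakness"
    if g < threshold:
        return "powerful but dangerous or unstable long-term"
    return "durable, scalable, trustworthy"

# Illustrative (CPR, G) pairs, not measured systems.
for cpr, g in [(2.0, 5.0), (4.5, 1.5), (4.5, 4.0)]:
    q = cpr ** 2 * g
    print(f"CPR={cpr}, G={g}, Q={q:.1f}: {interpret(cpr, g)}")
```

Checking CPR before G mirrors the ordering of the interpretation: when the structural core is weak, the governance score does not change the verdict.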